Building the Bedrock: A Guide to Testing Infrastructure

We unveil the core concepts driving our testing team’s success, from Automation Testing Framework to Behaviour Driven Development (BDD) and Continuous Integration. Explore how our meticulous approach ensures robust testing infrastructure for seamless project integration.

Written by Tanisha Dewan, Software Test Analyst at Tier 2 Consulting

At Tier 2 we take pride in building a robust test automation framework for functional testing.

These are the foundational concepts embraced by our Testing team:

A – Automation Testing Framework

B – Behaviour Driven Development (BDD)

C – Continuous Integration, Development, and Testing

D – Deployment of Tier 2 Test Framework to New Project

A – Automation Testing Framework

Imagine the automation framework as the tester’s pen, scripting reusable libraries, defining coding standards, and weaving together a plotline of reporting mechanisms and test data management strategies. It becomes the backbone of the narrative, allowing the characters (developers and testers) to focus on creating a compelling software tale without being bogged down by the minutiae of repetitive manual tasks.

We are sometimes asked: why build your own framework rather than buy a subscription to an off-the-shelf test automation tool?

Here are some of the benefits of an in-house framework:

- Cost-effective over time – initially, building a framework might take time and effort, but in the long run, it often pays off. It’s like investing in a high-quality tool that lasts longer and saves money compared to frequently buying cheaper, off-the-shelf solutions.

- Reduces dependency on third parties and increases flexibility, allowing us to tweak and adjust things as needed.

- More scalable – as we take on more development projects, our testing needs increase. An in-house framework can be scaled up or down according to these changing needs.

- Integration with existing tools – building our own framework allows for seamless integration with the tools we already use.

However, test automation is often misunderstood. It is a powerful tool for improving the efficiency and effectiveness of software testing, but several misconceptions surround it. Here are some common ones:

- Automation can replace manual testing entirely: While automation can handle repetitive and predictable tasks, manual testing is still crucial for exploratory testing, usability testing, and scenarios that require human intuition.

- Test automation guarantees 100% coverage: achieving 100% test coverage is challenging and may not be practical in all cases. Automation can help cover a significant portion of the codebase, but some areas may still require manual testing.

- Test automation is a one-time investment: test automation requires ongoing maintenance and updates to adapt to changes in the application. New features, updates, and changes in requirements may necessitate adjustments to existing test scripts.

- Automated tests are inherently faster than manual tests: while automation can execute repetitive tasks quickly, developing and maintaining automated test scripts may initially take more time than manual testing. Additionally, automated tests may not be faster for small, one-time testing efforts.

- Automation eliminates the need for a testing strategy: test automation should complement a well-defined testing strategy. Organisations should still establish test objectives, prioritise test cases, and balance automated and manual testing based on the project’s needs.

At Tier 2, embracing automation isn’t merely a choice; it’s a strategic imperative. Here’s how we extract the utmost value when embracing automation:

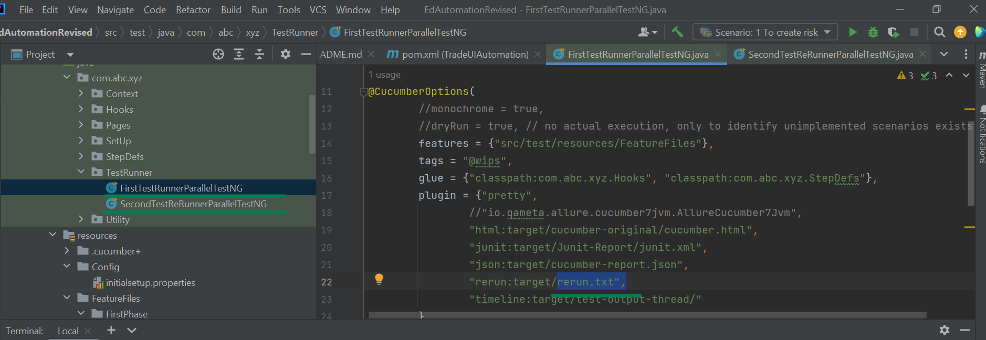

- Configurable test environment – with configurable environmental data, the test scripts can be written in a way that is independent of the specific environment details (e.g., URLs, database connections). This enables the same set of tests to be executed seamlessly across multiple environments.

- Parameterisation of test data: parameterising test data enhances test coverage and supports data-driven testing approaches. Environmental data configuration includes parameters for test data, allowing our testing team to easily switch between datasets or input values without modifying the test scripts.

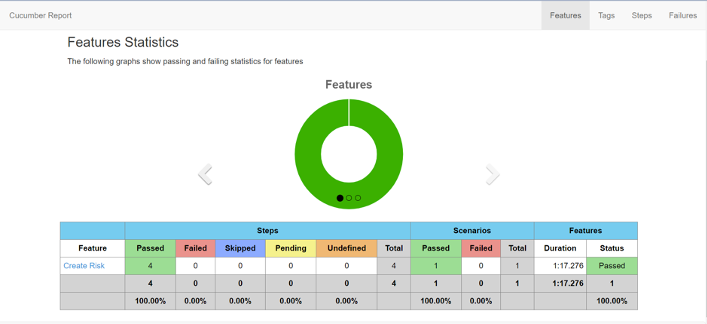
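The two points above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Tier 2 implementation: the `ENVIRONMENTS` table, the `EnvConfig` class, the `load_config` and `client_url` helpers, and every URL and data value are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical environment table. In a real framework this would more likely
# live in a properties, YAML, or JSON file than in code.
ENVIRONMENTS = {
    "SIT": {"base_url": "https://sit.example.test", "browser_type": "chrome"},
    "NFT": {"base_url": "https://nft.example.test", "browser_type": "firefox"},
}


@dataclass(frozen=True)
class EnvConfig:
    env_name: str
    base_url: str
    browser_type: str


def load_config(env_name: str) -> EnvConfig:
    """Look up environment details by name so test scripts never hard-code them."""
    try:
        settings = ENVIRONMENTS[env_name]
    except KeyError:
        raise ValueError(f"Unknown environment: {env_name!r}") from None
    return EnvConfig(env_name=env_name, **settings)


# Hypothetical parameterised test data: the same check runs once per row.
CLIENT_CASES = [
    {"client_name": "Acme Ltd"},
    {"client_name": "Globex Corporation"},
]


def client_url(config: EnvConfig, client_name: str) -> str:
    """Build a URL from environment config plus test data, not from literals."""
    slug = client_name.lower().replace(" ", "-")
    return f"{config.base_url}/clients/{slug}"
```

Switching from one environment to another then means changing the name passed to `load_config`, and adding a dataset means adding a row to `CLIENT_CASES`; the test logic itself stays untouched.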

- Fully customised reports: our reports provide insights into test execution, results, and potential issues. Our well-structured and detailed reports are essential for effective collaboration, debugging, and decision-making.

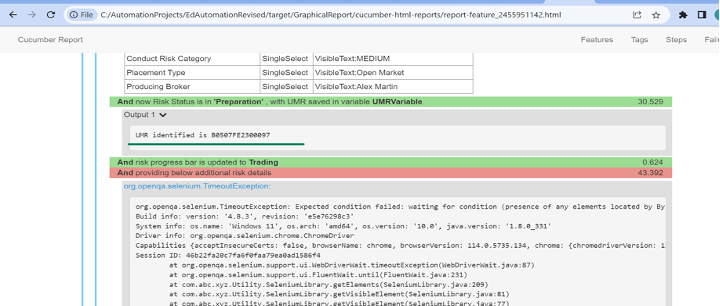

- Detailed logging: the Tier 2 framework offers detailed logs, configured to show the sequence of steps executed during a test. This traceability is crucial for recreating test scenarios, especially when dealing with complex test cases or intermittent failures.

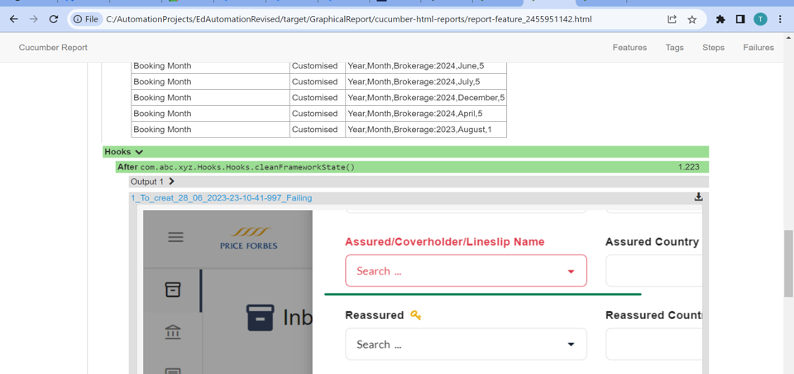

- Screenshots: we can attach screenshots of failed test steps to the report.

- Automatic rerun of failed test cases: test automation frameworks may occasionally produce false-positive results, indicating a failure even when the application under test is functioning correctly. We therefore configure only the failed test cases to rerun, which helps us identify and address flakiness.
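The rerun idea in the last point can be sketched as follows. This is a minimal illustration, assuming tests are plain callables that raise `AssertionError` on failure; the names `run_once`, `run_with_rerun`, `make_intermittent`, and `always_fails` are hypothetical, and real frameworks usually provide this through their runner's own rerun facility.

```python
from typing import Callable, Dict, List

Test = Callable[[], None]


def run_once(test: Test) -> bool:
    """Return True if the test passes, False if it raises AssertionError."""
    try:
        test()
        return True
    except AssertionError:
        return False


def run_with_rerun(tests: Dict[str, Test], max_reruns: int = 1) -> Dict[str, List[str]]:
    """Run every test once, then rerun only the ones that failed.

    A test that fails first and passes on a rerun is reported as flaky;
    a test that fails every attempt is reported as failed.
    """
    first_pass = {name: run_once(test) for name, test in tests.items()}
    results: Dict[str, List[str]] = {
        "passed": [name for name, ok in first_pass.items() if ok],
        "flaky": [],
        "failed": [],
    }
    for name, ok in first_pass.items():
        if ok:
            continue
        # any() stops at the first successful rerun.
        recovered = any(run_once(tests[name]) for _ in range(max_reruns))
        results["flaky" if recovered else "failed"].append(name)
    return results


def make_intermittent(failures_before_pass: int = 1) -> Test:
    """Build a demo test that fails a fixed number of times, then passes."""
    remaining = {"failures": failures_before_pass}

    def test() -> None:
        if remaining["failures"] > 0:
            remaining["failures"] -= 1
            raise AssertionError("simulated intermittent failure")

    return test


def always_fails() -> None:
    raise AssertionError("simulated genuine defect")
```

Keeping flaky results in their own bucket, rather than folding them into the passes, means an intermittent failure is still visible in the report and can be investigated.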

B – Behaviour Driven Development (BDD)

AI can certainly help us write better BDD scenarios faster, but knowing what “good Gherkin” looks like is still essential.

AI supports and enhances communication and scenario writing, but it cannot replace collaboration or conversations that are at the heart of the BDD process. The goal of BDD is to build a common understanding so you can deliver valuable features faster, and Gherkin is a language we use to make sure everyone is on the same page.

In other words, ChatGPT can and will mislead you if you’re not careful or not sure how to write a perfect BDD scenario.

The renowned “given…when…then” notation may appear deceptively simple and intuitive, seemingly accessible to anyone for crafting exceptional Behaviour-Driven Development (BDD) scenarios. However, mastery of this art is not an instantaneous feat; rather, it requires a refined skill set and dedicated practice. The challenge lies in the fact that many teams grapple with the task of composing scenarios of high quality or find themselves entangled in a test suite replete with substandard scenarios.

Here are a few tips to create effective BDD scenarios:

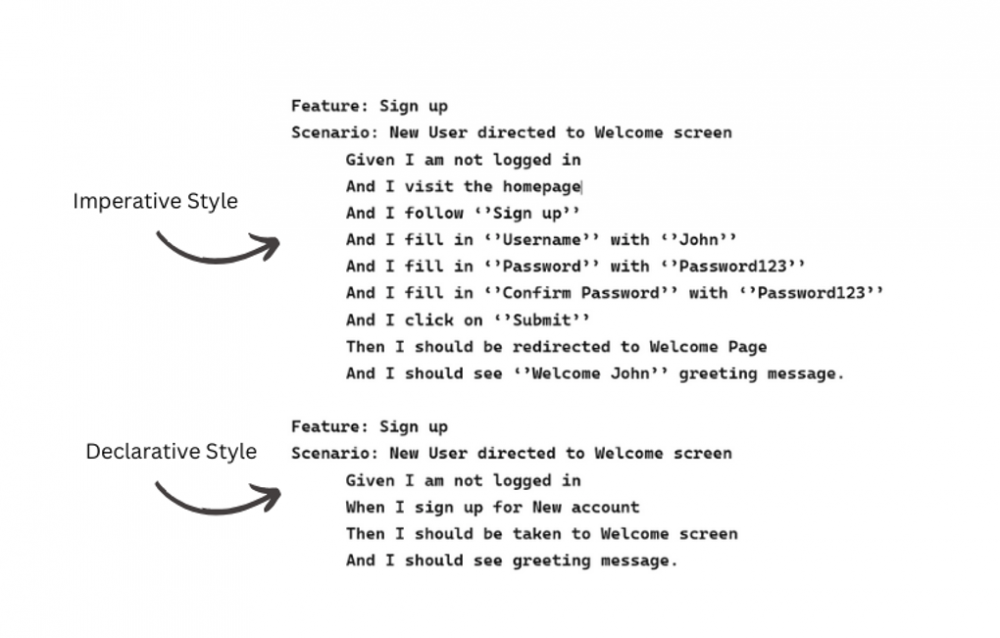

- Try not to use imperative language, but rather declarative language.

Imperative style doesn’t work so well with Cucumber.

- Don’t think of Cucumber as a test scripting tool. There is a common misconception surrounding Cucumber, often perceived solely as a testing tool.

Unfortunately, this misunderstanding leads to the creation of Cucumber scenarios resembling conventional test scripts. It’s as if one is articulating testing instructions to another manual tester, following a step-by-step approach.

Regrettably, such an approach is not conducive to effective Cucumber usage. The outcome tends to be a tangled web of fragile test scripts, resulting in decreased efficiency and a significant maintenance burden. It is crucial to recognise that Cucumber’s strength lies in its ability to express behaviour and collaboration, not to emulate traditional testing scripts. Adopting a more thoughtful and scenario-driven approach will undoubtedly yield more maintainable and robust outcomes.

- Don’t include too much information in a BDD scenario. Keep it clear and concise.

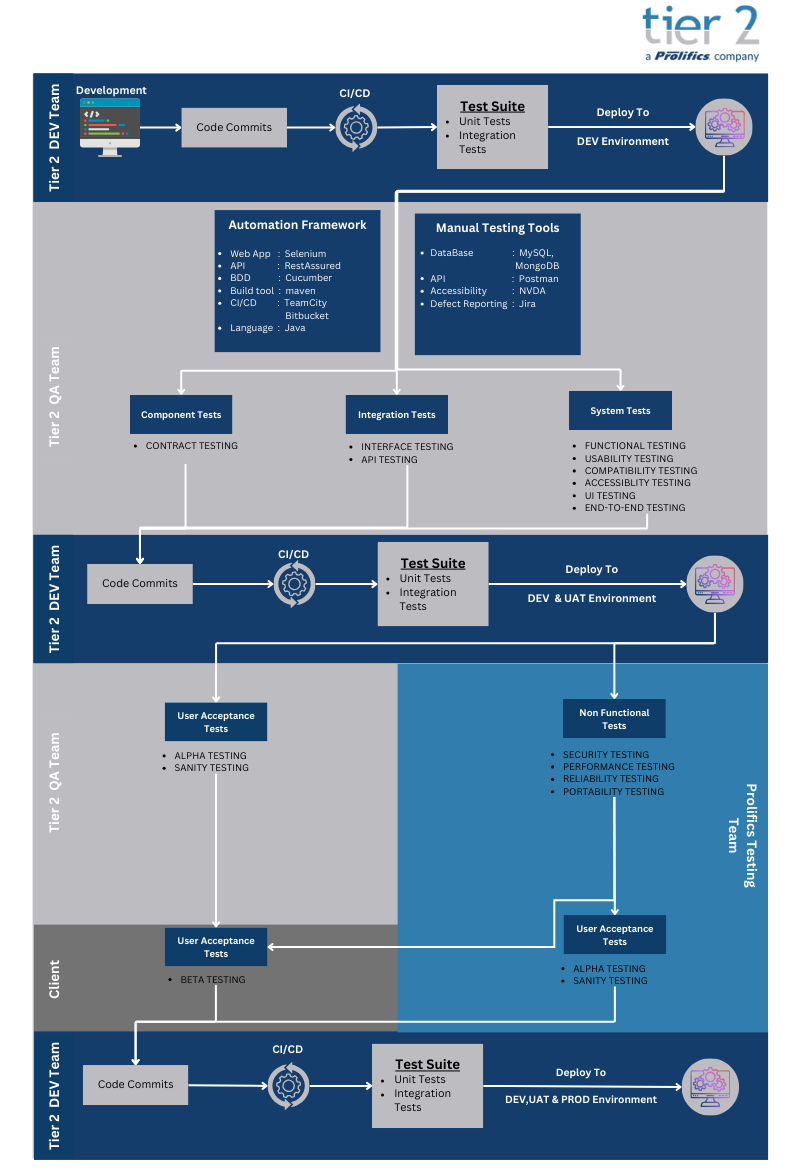
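The difference between imperative and declarative scenarios is easier to see side by side. The sketch below holds two made-up Gherkin scenarios as Python strings and applies a deliberately crude check that flags steps mentioning UI mechanics. The scenarios, the word list, and the `imperative_steps` helper are all hypothetical, and a keyword check like this is only a prompt for a conversation, not a substitute for reviewing scenarios with the team.

```python
import re
from typing import List

# Imperative: reads like instructions to a manual tester.
IMPERATIVE = """\
Scenario: Customer logs in
  Given I open the login page
  When I type "jane@example.test" into the email field
  And I type "secret" into the password field
  And I click the "Sign in" button
  Then I see the text "Welcome, Jane"
"""

# Declarative: describes the behaviour, not the clicks.
DECLARATIVE = """\
Scenario: Registered customer signs in
  Given Jane is a registered customer
  When Jane signs in with valid credentials
  Then Jane sees her account dashboard
"""

# Hypothetical list of words that suggest a step describes UI mechanics.
UI_MECHANICS = re.compile(
    r"\b(click|type|press|scroll|select|field|button|dropdown)\b", re.IGNORECASE
)
STEP_KEYWORDS = ("Given ", "When ", "Then ", "And ", "But ")


def imperative_steps(scenario: str) -> List[str]:
    """Return the steps whose wording mentions UI mechanics."""
    steps = [
        line.strip()
        for line in scenario.splitlines()
        if line.strip().startswith(STEP_KEYWORDS)
    ]
    return [step for step in steps if UI_MECHANICS.search(step)]
```

If the sign-in form later gains a field or a button is renamed, the declarative scenario stays valid and only the step implementation behind it changes.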

C – Continuous Integration, Development, and Testing

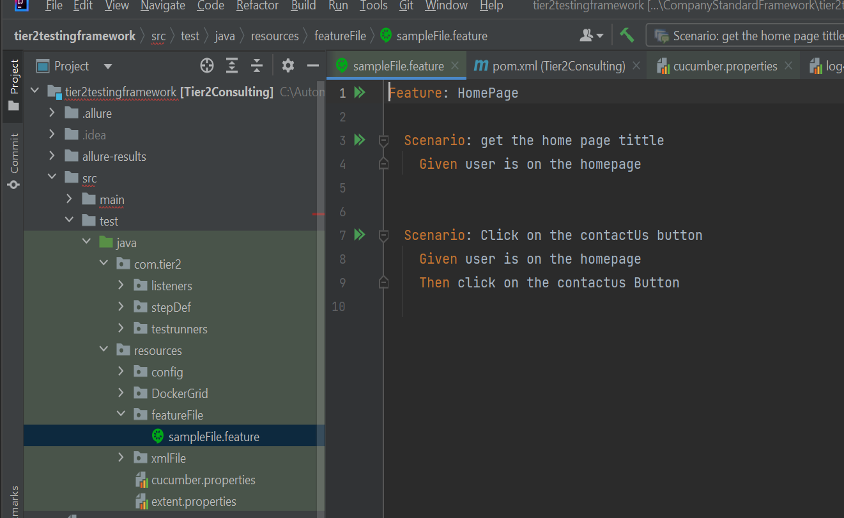

The flow diagram below depicts the continuous development and testing lifecycle at Tier 2:

Image created by Vishal Tailor, Software Tester at Tier 2 Consulting

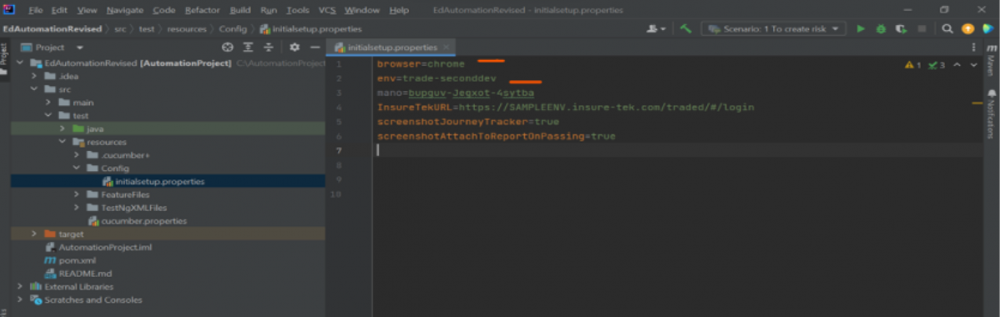

D – Deployment of Testing Framework to New Project

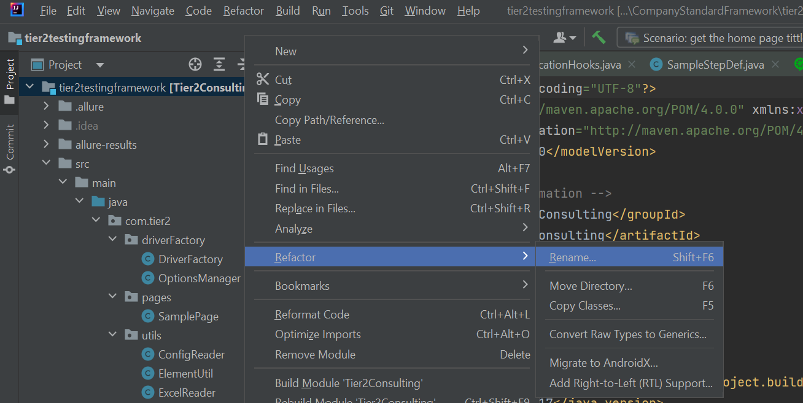

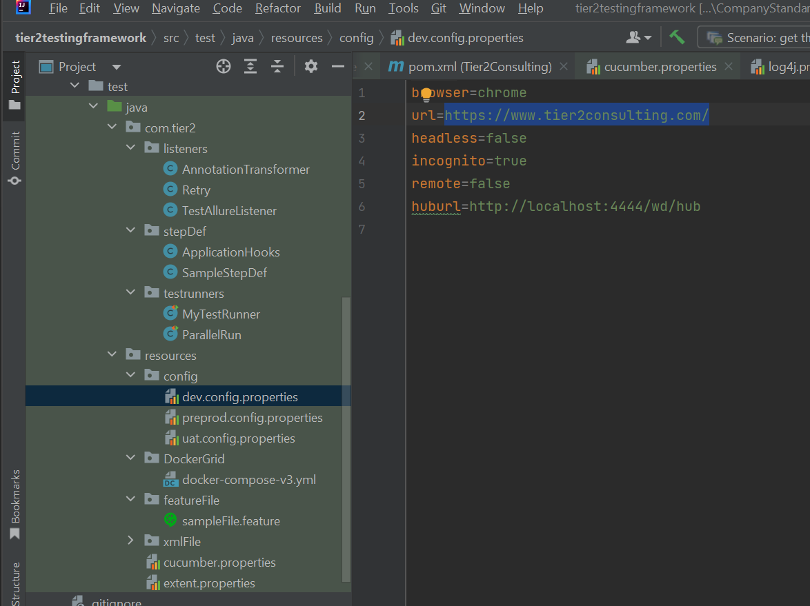

Our test automation framework is designed for straightforward integration into any new project. Adapting it takes only a few steps:

- Pull the code for our skeleton framework.

- Rename the project to the new project name.

- Update the configuration file with the URL of the web application under test.

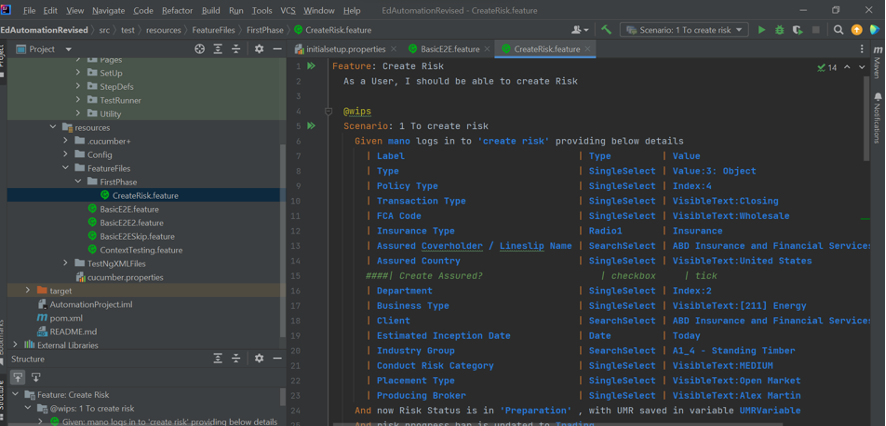

- Update the feature files based on the system under test.

That’s it!

We are good to start with automation testing on any new project.

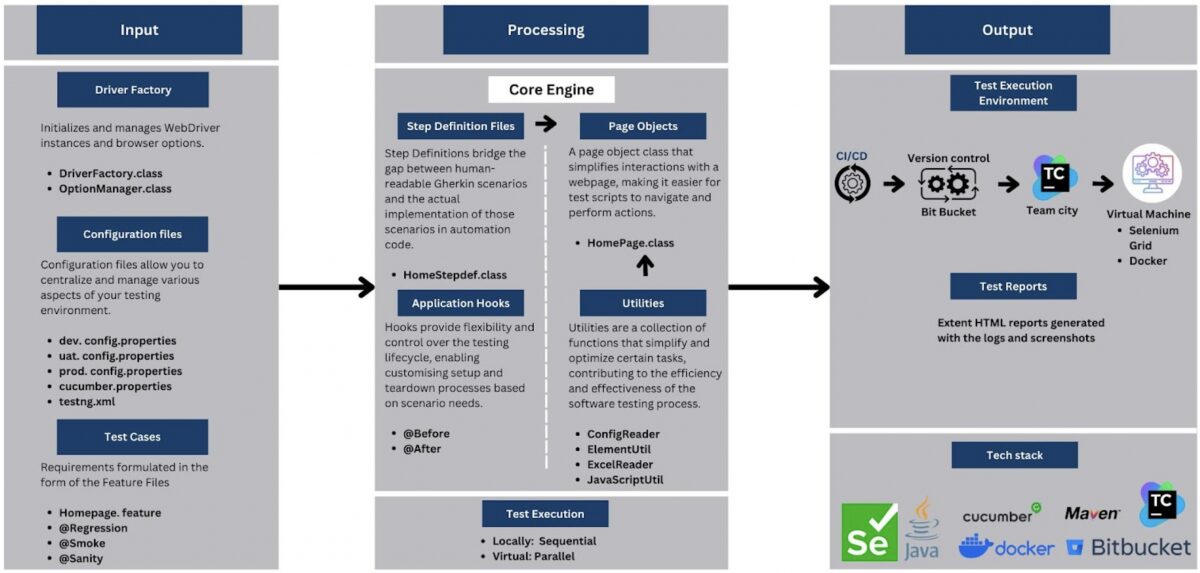

Tier 2 Automation Framework Architecture

The framework is structured with three pivotal components at its core: Input, Processing, and Output, as illustrated below:

Image created by Vishal Tailor, Software Tester at Tier 2 Consulting

Within the Input Component, a curated collection of data feeds is assembled to drive test initiation and execution. Test data is thoughtfully categorised into two distinct types: Environmental and Test-Specific. Examples of environment data include ‘BrowserType’ and ‘SIT,’ while ‘Client Name’ and ‘FileToUpload’ exemplify test-specific data. Tests manifest as scenarios, articulated with declarative language and enveloped by pertinent test data. These scenarios are further enriched by classification tags, encompassing testing types (e.g., ‘Smoke,’ ‘Regression’) and functional aspects (‘Order,’ ‘Account’), or even environmental nuances (‘SIT,’ ‘NFT’).
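As a rough illustration of how classification tags can drive scenario selection, here is a small Python sketch. The `Scenario` class, the `select` helper, and the sample suite are hypothetical; Cucumber provides its own tag expressions for this, so the sketch only shows the underlying idea. The tag and data names are taken from the examples above, while the data values are invented.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Set


@dataclass
class Scenario:
    name: str
    tags: Set[str]
    # Test-specific data travels with the scenario.
    test_data: Dict[str, str] = field(default_factory=dict)


def select(
    scenarios: Iterable[Scenario],
    required_tags: Iterable[str],
    excluded_tags: Iterable[str] = (),
) -> List[Scenario]:
    """Keep scenarios that carry every required tag and no excluded tag."""
    required = set(required_tags)
    excluded = set(excluded_tags)
    return [
        s for s in scenarios
        if required <= s.tags and not (excluded & s.tags)
    ]


# Hypothetical suite mixing testing-type, functional, and environment tags.
SUITE = [
    Scenario("Place an order", {"Smoke", "Order", "SIT"}, {"Client Name": "Acme Ltd"}),
    Scenario("Upload account statement", {"Regression", "Account", "SIT"},
             {"FileToUpload": "statement.csv"}),
    Scenario("Order under load", {"Regression", "Order", "NFT"}),
]
```

For example, `select(SUITE, {"Regression"}, excluded_tags={"NFT"})` would pick only the regression scenarios meant for the SIT environment.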

The Processing Component handles incoming data, orchestrating the seamless execution of scenarios in the specified environment and browser. StepDefinitions encapsulate the implementation of scenarios, delegating interactions and assertions to logically mapped PageObjects. Supporting this, various Utility classes ensure the efficacy of the PageObjects. Hooks play a crucial role, executing pre-identified tasks before and after each scenario, such as browser management, session handling, and data setup/teardown activities. The execution strategy, whether sequential or parallel, is tailored based on application session management and the complexity of the Application Under Test (AUT).
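The relationship between hooks, step definitions, and page objects can be sketched as below. This is an assumption-laden illustration rather than the framework's actual code: `FakeBrowser` stands in for a real browser driver so the example runs on its own, and `LoginPage`, `before_scenario`, `after_scenario`, `when_customer_signs_in`, and `run_scenario` are hypothetical names.

```python
from typing import Callable, Dict, List, Tuple


class FakeBrowser:
    """Stand-in for a real browser driver; it only records what it was asked to do."""

    def __init__(self) -> None:
        self.actions: List[tuple] = []
        self.is_open = True

    def visit(self, url: str) -> None:
        self.actions.append(("visit", url))

    def fill(self, field_name: str, value: str) -> None:
        self.actions.append(("fill", field_name, value))

    def click(self, element: str) -> None:
        self.actions.append(("click", element))

    def quit(self) -> None:
        self.is_open = False


class LoginPage:
    """Page object: the only place that knows how the login screen is built."""

    def __init__(self, browser: FakeBrowser, base_url: str) -> None:
        self.browser = browser
        self.base_url = base_url

    def sign_in(self, email: str, password: str) -> None:
        self.browser.visit(f"{self.base_url}/login")
        self.browser.fill("email", email)
        self.browser.fill("password", password)
        self.browser.click("sign-in")


def before_scenario(context: Dict) -> None:
    """Hook: start a fresh browser for each scenario."""
    context["browser"] = FakeBrowser()


def after_scenario(context: Dict) -> None:
    """Hook: always close the browser, even if a step failed."""
    context["browser"].quit()


def when_customer_signs_in(context: Dict, email: str, password: str) -> None:
    """Step definition: delegates the interaction to the page object."""
    LoginPage(context["browser"], context["base_url"]).sign_in(email, password)


def run_scenario(steps: List[Tuple[Callable, tuple]], base_url: str) -> Dict:
    """Run the hooks around the steps, the way a BDD runner would."""
    context: Dict = {"base_url": base_url}
    before_scenario(context)
    try:
        for step, args in steps:
            step(context, *args)
    finally:
        after_scenario(context)
    return context
```

Because the step definition never touches element names directly, a change to the login screen would be absorbed inside `LoginPage` without editing any scenario or step.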

The Output Component takes charge of scheduled or ad-hoc executions within the CI/CD pipeline, operating in a meticulously controlled environment. Code retrieval from the repository precedes the execution of tests across a Selenium grid, facilitating test distribution. Docker ensures a standardised, agreed-upon environment, primed for test execution. The resulting test report encapsulates comprehensive testing statistics, complemented by screenshots and detailed logs.

Post-execution, results undergo meticulous analysis, potentially necessitating remediation. Stakeholders can stay informed through daily reports, either shared directly or subscribed to by consumers. This holistic approach ensures a refined and robust testing framework that seamlessly integrates into the software development lifecycle.

Conclusion

We have discussed how effective automation requires meticulous planning by skilled software testers. While AI aids in generating Behaviour-Driven Development (BDD) scripts, the crucial quality check relies on testers’ BDD proficiency. Also, an ideal framework facilitates seamless integration into new projects with minimal effort.

How can Tier 2 help?

Our proficient team of software testers is your comprehensive solution for all testing requirements – be it automation or manual, functional or nonfunctional. Expertise at Tier 2 allows for seamless integration of automation frameworks, ensuring a swift initiation of automation for any new project with minimal effort.